Gabriel Guralnick

My goal is to use AI to better understand human cognition and improve the way we interact with technology.

January 2026 - Present

Imbue

November 2024 - December 2025

Abacus.AI

I worked on LLM benchmarking and agent evaluations. I was the primary maintainer of LiveBench, a popular general-purpose LLM benchmark that evaluates models on reasoning, mathematics, language, coding, and data analysis capabilities. I also developed LiveSWEBench, a novel benchmark for AI coding assistants, which evaluated models based on their performance when working on their own or in collaboration with a human developer to solve real-world coding tasks. Most recently, I helped design and develop an evaluation suite for DeepAgent, a generalist agent with full computer access. This involved creating complex LLM-as-a-judge systems to evaluate the quality of a variety of outputs, such as research reports and websites.

September - December 2023

University of Toronto

I worked as a teaching assistant for the software design class. I mentored groups of second-year students as they developed their term projects. I helped students define goals, brainstorm ideas, and implement their solutions to complex problems in the creditors' insurance industry.

May - August 2023

Konrad Group

I worked on a variety of proof-of-concept research projects involving multimodal machine learning pipelines and large language models. The most impressive of these was an application for automatically generating Agile-style tickets from a website screenshot that incorporated image segmentation and captioning models and an LLM. I worked extensively both with LLM APIs and self-hosted and fine-tuned models.

May - August 2022

Konrad Group

I contributed to the maintenance and development of KGPortal, the primary internal web application used by Konrad employees to manage projects and other internal tasks. I helped maintain and improve both the frontend and backend components, implementing new UI components and full-page redesigns as part of a new UI library. I also led development of an entirely new secure file upload service, which involved updating the existing database and backend and adding integration with AWS S3 and Cron to support the new functionality.

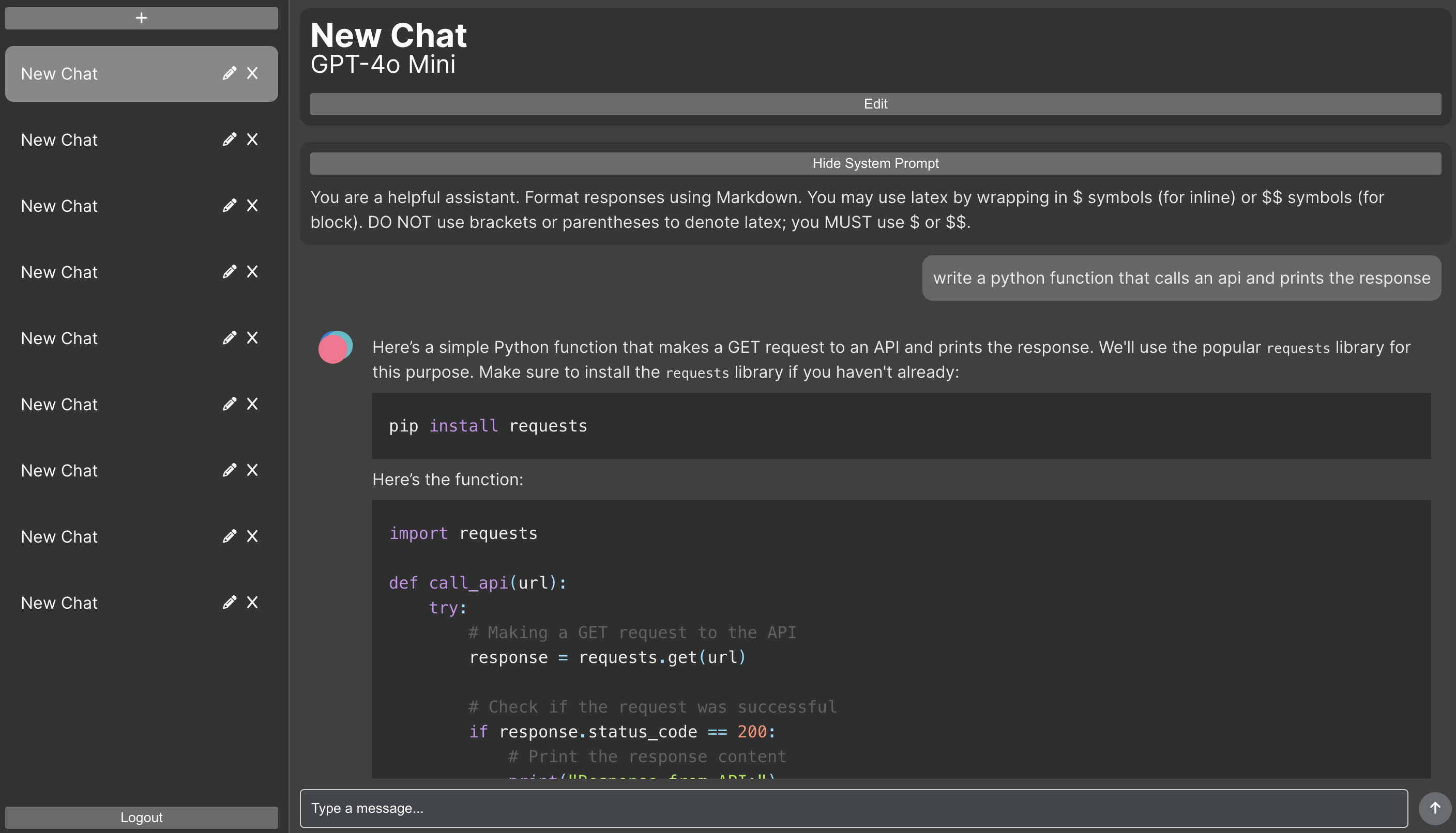

LLM Playground

Implemented full-stack application using React and FastAPI to interact with large language models.

Social Learning in Multi-Agent Systems

Collaborated with a professor and several peers to apply insights from research on social networks to multi-agent reinforcement learning.

Content Market Model of Online Social Networks

Implemented and simulated model of online social networks as a content market to evaluate theoretical accuracy.

Fine-Tuning Stable Diffusion

Evaluated the efficacy of LoRA, Textual Inversion, and Dreambooth for introducing new concepts to diffusion image generators.

GRIMM AutoFinance

Created and deployed a web application to help car buyers save time in the dealership.

Wikipedia 6 Degrees of Separation

Represented Wikipedia article categories using a graph to model connections and implemented the PageRank algorithm to judge article importance and relevance.

Climate Change Snowfall Effects

Modeled the relationship between the Global Land-Ocean Temperature Index and US Snowfall by region using Pandas and Plotly.

© 2026 Gabriel Guralnick